How we're steered towards false belief

Our brains do a pretty bad job of reasoning, and a great job of maintaining and strengthening false belief.

A while back I volunteered to contribute to a book on the behaviours and history of political, legal, and socio-economic systems. It was to be a primer for people creating products with the potential to disrupt those systems. My contribution was a chapter on confirmation bias, detailing its effects, its workings, and how it can be overcome. Though the book was never published, my research had me reconsidering my behaviour. Always careful with my words, I started speaking even more purposefully, not wanting to pass bias on to others. The experience had such an impact that I couldn't let my chapter sit unread, so I split it into three articles. The first speaks to the pernicious influence of confirmation bias. This is the second of the three, describing how it grows and spreads. The last article explains what we can do to fight confirmation bias.

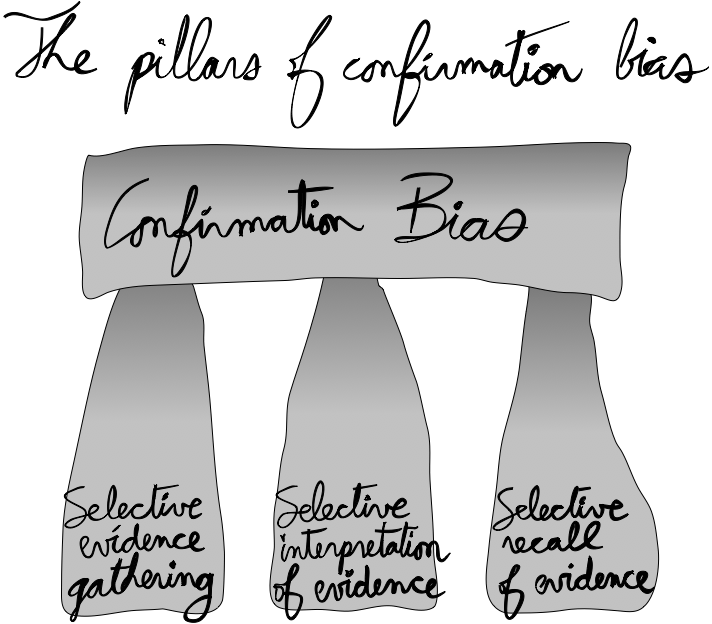

Confirmation bias is one of a number of cognitive biases which affect how we reason. It tricks us into accepting untruths and nurtures them until we're certain they're true. As a result, we're led to hold false beliefs with greater confidence than evidence can justify. Confirmation bias doesn't happen by itself. It needs agreeable conditions to grow, flourish, and persist. Our tendency to selectively gather, interpret, and recall information provides fertile ground for confirmation bias to take hold (See Fig. 1).

If we don't actively choose our information or how we interpret it, how does bias start? These tendencies operate while leaving our learning and reasoning process intact. We don't see our bias. We feel we're being rational, and we often are, but with skewed information. Every stage of belief development is affected, from initial hypothesis generation, to searching for, testing, interpreting, and recalling evidence.

Our selective gathering of evidence often starts when we form an initial belief from weak evidence. Have you ever firmly believed something to be true only to find out – years later – that it had no basis in reality? Maybe you forgot how you had come to believe in something which, under scrutiny, you later realised was completely false. How could you have been so wrong? Chances are you took something you heard or read at face value and carried it for years. This initial hypothesis generation is where confirmation bias starts to take hold.

Governed by something known as anchoring, that initial belief is powerful and can take root in our brains. Information acquired early carries more weight and is more readily recalled. First impressions matter. Belief starts to collect around those first pieces of information. With belief backed by initial weak evidence, we may have problems correctly interpreting better — possibly contradictory — information received later on.

With that kernel of belief in mind, we'll gather evidence to support it. This isn't to say we actively seek out that evidence, although we sometimes do. Rather, our brains tend to more readily take in information which supports our belief. Have you ever bought or considered a car, an item of clothing, or other object, and then suddenly started noticing the same make, model, or style much more often? This tendency is called the frequency illusion, and it plays a part when you're subconsciously gathering evidence to support a belief. We see what we seek.

Frequency illusion is only one of many tendencies which focus our attention on confirming evidence. Many of them are holdovers from when our evolutionary ancestors had to flee from danger. It didn't matter much if that danger wasn't a danger at all. Humans evolved with great pattern recognition skills — skills which also make us see patterns where there are none (i.e. false positives). Thanks to something called illusory correlation, we tend to see connections between unrelated events. People often stereotype certain groups — cultural, political, or otherwise — as being bad people, a drain on society, or simply "idiots". They probably started with an unfavourable belief about that group and likely later noticed members of that same group exhibit bad behaviour. They saw what they wanted to see, and failed to register the times they saw members of that group not demonstrating similar behaviour.

Gathering evidence isn't always enough. We usually test our beliefs to see if they hold. These tests are often flawed, however. For one, we're more likely to ask questions whose answer is "yes" should our hypothesis be true. In one study on test selection, participants were given a profile of someone described as either an extrovert or an introvert. Subjects were then asked to interview that person and determine if they fit the labeled personality type. Participants usually picked questions which, if answered with "yes", would strongly confirm their profile, and strongly disconfirm it if answered with "no". For instance, someone given a profile flagged as belonging to an extrovert might have asked "Do you enjoy large parties?" but not "Do you enjoy time alone?". This reinforcement of our initial belief through positive tests leads us to be more confident in our belief, even if the information we collect has no value.

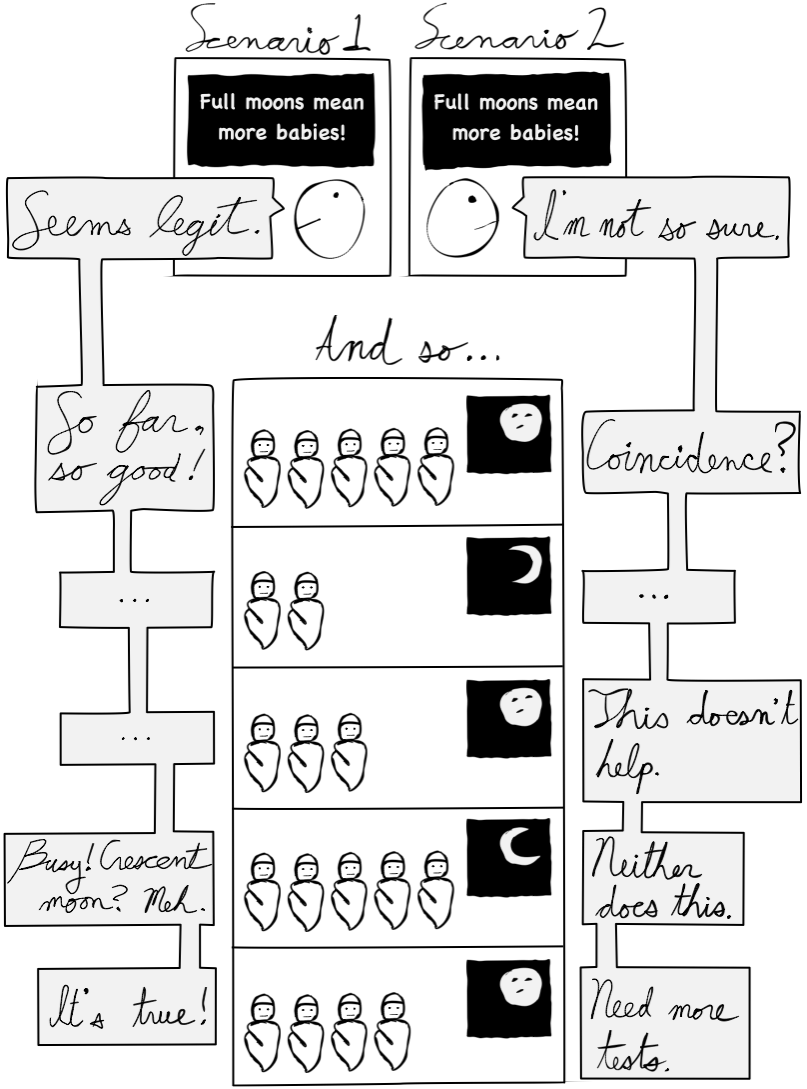

This tendency to seek and test largely positive evidence can uncover patterns which may not exist, limiting discovery. These tests can confirm belief but will not uncover false negatives. Using Wason's 2-4-6 task from part 1 of this series as an example, subjects tested their theory by picking three numbers which fit it, not three numbers which fit a different but also valid theory, nor numbers which didn't fit their theory at all. For example, someone who believed that a 2-4-6 sequence represented even numbers increasing by two might have tested it with 8-10-12, but not 3-5-7, nor even 6-4-2. When we rely on largely positive evidence, we fool ourselves into a false belief (see Fig. 3).
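To make that blind spot concrete, here's a minimal Python sketch of the 2-4-6 example. It assumes Wason's hidden rule was simply "any three ascending numbers"; the function names and the extra test triples are my own, made up for illustration.

```python
def true_rule(triple):
    """Assumed hidden rule: any three numbers in ascending order."""
    a, b, c = triple
    return a < b < c


def hypothesis(triple):
    """The subject's narrower theory: even numbers increasing by two."""
    a, b, c = triple
    return a % 2 == 0 and b - a == 2 and c - b == 2


def run_tests(triples):
    """Pair each tested triple with the theory's prediction and the real answer."""
    return [(t, hypothesis(t), true_rule(t)) for t in triples]


def surprises(results):
    """Keep only the tests whose real answer contradicts the theory."""
    return [t for t, predicted, actual in results if predicted != actual]


# Positive tests: triples the theory says should fit.
positive_tests = [(8, 10, 12), (20, 22, 24), (100, 102, 104)]
# Negative tests: triples the theory says should NOT fit.
negative_tests = [(3, 5, 7), (6, 4, 2), (1, 2, 50)]

print(surprises(run_tests(positive_tests)))  # []
print(surprises(run_tests(negative_tests)))  # [(3, 5, 7), (1, 2, 50)]
```

Every positive test agrees with the narrow theory, so the theory is never challenged. Only the triples the theory says should fail reveal that the real rule is broader than we thought.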

Test results need to be interpreted to be useful. Confirmation bias kicks in here as well. Evidence and tests which confirm our belief are prone to be seen as reliable and relevant, and are often accepted at face value. By contrast, evidence which disagrees with our belief is often seen as unreliable or unimportant, and is scrutinised, often hypercritically, especially if the source is believed to be subject to error. When evidence is ambiguous, vague, or open to interpretation, we unfortunately tend to give our beliefs the benefit of the doubt. As an example, a teacher might interpret a student's non-standard answer to a question as either stupid or creative, depending on how the teacher already feels about the student. (Recall the study in part 1 of this series of a girl playing in urban and suburban neighbourhoods.)

When we overweigh or underweigh evidence in this way, we usually require less evidence to uphold a belief than to reject one. Other factors are also at play, such as our degree of confidence in our belief, the value of reaching a correct conclusion, or the cost of making a bad decision. Our motivation for truth, however, can still be outweighed by our need for self-esteem, approval from others, control, and the internal consistency that confirming evidence provides. In many cases, it may be more important for us to maintain our belief than to be accurate. Being wrong is painful and often seen as undesirable, as shown by the expectation that we "have the courage of our convictions."

Interpreting tests and evidence can be quite challenging. We feel we're impartial and open, that we adjust our belief accordingly, but the opposite is often true. For one, most of us have trouble with statistics and probability. Even the most analytical minds fail to properly weigh the odds that a belief is true given confirming evidence against how likely that evidence was to turn up anyway (see Bayes' theorem). Similarly, we rarely consider the odds of disconfirming evidence or alternative beliefs being correct. Most people also fall prey to a number of logical fallacies and generalisations, which hinder the way we interpret tests and evidence.
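For reference, here is Bayes' theorem with a small worked example. H stands for our belief and E for a piece of confirming evidence. The numbers are invented purely for illustration and don't come from any study.

```latex
P(H \mid E) = \frac{P(E \mid H)\,P(H)}{P(E \mid H)\,P(H) + P(E \mid \neg H)\,P(\neg H)}

% Illustrative numbers (assumed):
% P(H) = 0.5, P(E | H) = 0.8, P(E | not H) = 0.6
P(H \mid E) = \frac{0.8 \times 0.5}{0.8 \times 0.5 + 0.6 \times 0.5} = \frac{0.40}{0.70} \approx 0.57
```

With these numbers, evidence that feels strongly confirming only moves us from 50% to about 57%, because it was nearly as likely to show up if our belief were false. That second term in the denominator is the part we tend to skip.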

The feedback loop between evidence gathering, testing, and interpretation is ongoing. How we test and interpret our evidence governs how we see new evidence. We can quickly grow more confident in our belief, interpreting even ambiguous evidence as supporting it. This confidence can make it painful to give up our beliefs. We become more likely to question information which goes against them than information which agrees with them. Searching for and interpreting evidence, then, can be an internal fight between what is right and what feels good. Confirmation bias is not a simple error, but an internally coherent pattern of reasoning.

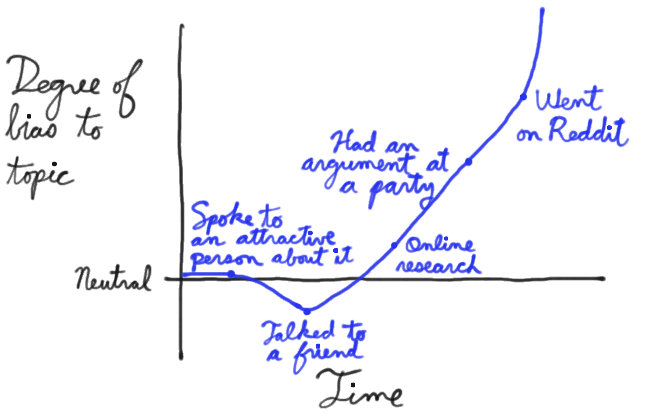

Confirmation bias grows and persists by way of a number of tendencies. To combat our bias, we need to understand how our beliefs can be so easily skewed by it. One way to do so is to think about our belief formation as influenced by a series of signals. We're constantly receiving signals of the true state of the world, through our senses and our interactions with it. Learning of something online, watching a video clip, speaking with someone outside our circle: signals like these influence our belief. A rational observer who perfectly rates each signal and applies it to her beliefs would, after an infinite number of signals, always attain near-certain belief in the true state of the world.
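Here's a rough Python sketch of what that rational observer is doing. It assumes a deliberately simple world: only two possible states, A and B, and every signal points to the true state with the same fixed probability (0.7 here, a number I picked for illustration). The function name and parameters are hypothetical.

```python
def posterior_a(n_a, n_b, accuracy=0.7, prior=0.5):
    """Belief that state A is true after n_a signals for A and n_b for B.

    Assumes each signal independently points to the true state with
    probability `accuracy`, and updates the prior with Bayes' theorem.
    """
    likelihood_ratio = accuracy / (1 - accuracy)
    # Opposing signals cancel out, so only the difference matters.
    odds = prior / (1 - prior) * likelihood_ratio ** (n_a - n_b)
    return odds / (1 + odds)


print(posterior_a(0, 0))    # no signals yet: still undecided
print(posterior_a(7, 3))    # a modest lead for A
print(posterior_a(70, 30))  # the same ratio, ten times the signals
```

Seven signals against three already put the observer at roughly 97% for A, and seventy against thirty leave her all but certain. Because she counts every signal at face value, the true state wins out as the signals pile up.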

Few of us are perfectly rational, however. We may start our decision-making process believing that two sides to an issue are equally valid. This may change as soon as we receive our first signal. As with the primacy effect, that first signal may completely determine our final belief. Once we begin leaning towards a belief, we may misinterpret further signals which conflict with it. We may ignore or underweigh a conflicting signal, or overweigh one which agrees with our belief. Under bias, our belief formation may quickly become a feedback loop. Every signal we receive may be used to defend or justify our position. Pretty soon, we're like a boat that's drifted off-course.

The existence of our bias inhibits our ability to overturn false belief. If our bias is severe enough, further disconfirming signals may even worsen it. Even after an infinite number of signals, our bias may compel us to hold an incorrect belief with near-certainty. Chances are, though, that we'll become convinced of our own belief and stop paying attention to further signals. After processing a number of signals, our belief may go from feeling natural to feeling incontestable.
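The drift described above can be simulated in a few lines of Python. This is a sketch loosely modelled on the signal idea, not a faithful reproduction of any published model. It assumes two possible states (A is the true one), signals that are right 70% of the time, and an observer who misreads a conflicting signal as a supporting one with probability `bias`. All names and numbers are assumptions for illustration.

```python
import math
import random


def simulate(n_signals, accuracy=0.7, bias=0.0, seed=0):
    """Return the observer's final belief that A is true (A really is true)."""
    rng = random.Random(seed)
    lead = 0  # perceived signals for A minus perceived signals for B
    for _ in range(n_signals):
        signal = 1 if rng.random() < accuracy else -1
        # A signal conflicts if we already lean one way and it points the other.
        conflicts = lead != 0 and (signal > 0) != (lead > 0)
        if conflicts and rng.random() < bias:
            signal = -signal  # misread it as agreeing with our current belief
        lead += signal
    # Bayesian belief based on what the observer *thinks* she saw.
    log_odds = lead * math.log(accuracy / (1 - accuracy))
    log_odds = max(-700.0, min(700.0, log_odds))  # keep exp() in range
    return 1 / (1 + math.exp(-log_odds))


def share_wrong(runs, n_signals, bias):
    """Fraction of simulated observers who end up favouring the false state."""
    wrong = sum(simulate(n_signals, bias=bias, seed=s) < 0.5 for s in range(runs))
    return wrong / runs


for bias in (0.0, 0.5, 1.0):
    print(bias, share_wrong(runs=500, n_signals=100, bias=bias))
```

With `bias` at 1.0, every signal after the first is read as agreeing, so that first signal alone fixes the final belief, and the observer ends up near-certain either way. At lower settings I'd expect the share of wrong beliefs to shrink, though the exact figures depend on the assumptions above.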

Given that the tendencies supporting bias largely operate subconsciously and are entrenched in our brains, can we do something about them? Can we debias ourselves? Or are we doomed to keep making bad decisions based on selective processes? Awareness is the first step, but what about debiasing other individuals or groups we're a part of? Read on for part three of this series.

References

- [JONES2000] Jones, M., and Sugden, R. (2000). Positive confirmation bias in the acquisition of information. (Dundee Discussion Papers in Economics; No. 115). University of Dundee.

- [NELSON2015] Nelson, J. A. (2015). Are women really more risk-averse than men? A re-analysis of the literature using expanded methods. Journal of Economic Surveys, 29: 566-585.

- [NICKERSON1998] Nickerson, R. S. (1998). Confirmation bias: a ubiquitous phenomenon in many guises. Review of General Psychology, Vol. 2, No. 2, pp. 175-220.

- [KLAYMAN1995] Klayman, J. (1995). Varieties of confirmation bias. In J. Busemeyer, R. Hastie, & D. L. Medin (Eds.), Decision making from a cognitive perspective. New York: Academic Press (Psychology of Learning and Motivation, vol. 32), pp. 365-418.

- [RABIN1999] Rabin, M., and Schrag, J. L. (1999). First impressions matter: a model of confirmatory bias. The Quarterly Journal of Economics, Vol. 114, No. 1, pp. 37-82.